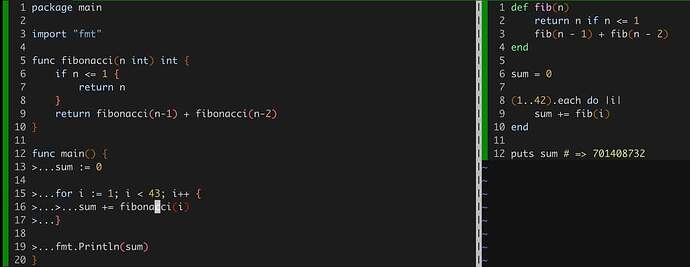

Oooo I love benchmarks! You didn’t post your go code and I don’t want to install ruby right now, but I have crystal 1.0.0 installed currently so let’s try it with your code above and this rust code:

fn fib(n: usize) -> usize {

match n {

0 | 1 => 1,

n => fib(n - 1) + fib(n - 2),

}

}

fn main() {

let sum: usize = (0..42usize).map(fib).sum();

println!("{}", sum);

}

And I compiled crystal like:

❯ shards build --release

Dependencies are satisfied

Building: crystal_fib

Error target crystal_fib failed to compile:

/usr/bin/ld: cannot find -levent (this usually means you need to install the development package for libevent)

collect2: error: ld returned 1 exit status

Uh wut? I’m not using any deps, this is as basic as it gets, crystal doesn’t come with everything it already needs?!? Well let’s get libevent I guess…

I love how it said Dependencies are satisfied in green first, lol, quit lying…

So trying again (it took longer to build than the rust one, about 3 times longer, how?!?):

❯ shards build --release

Dependencies are satisfied

Building: crystal_fib

And ditto with the rust one:

❯ cargo run --release

Compiling testing v0.1.0 (/home/overminddl1/rust/tmp/rust_fib)

Finished release [optimized] target(s) in 0.38s

Running `target/release/rust_fib`

701408732

And I got these times very consistently across a lot of manual runs but I ran them both with hyperfine anyway (a great tool to benchmark multiple cli tools statistically) for the pretty output as well:

❯ time ./crystal_fib/bin/crystal_fib

701408732

./crystal_fib/bin/crystal_fib 4.79s user 0.00s system 100% cpu 4.793 total

❯ time ./rust_fib/target/release/rust_fib

701408732

./rust_fib/target/release/rust_fib 1.79s user 0.00s system 99% cpu 1.792 total

❯ hyperfine --warmup 3 './crystal_fib/bin/crystal_fib' './rust_fib/target/release/testing'

Benchmark #1: ./crystal_fib/bin/crystal_fib

Time (mean ± σ): 4.797 s ± 0.028 s [User: 4.791 s, System: 0.004 s]

Range (min … max): 4.770 s … 4.868 s 10 runs

Benchmark #2: ./rust_fib/target/release/rust_fib

Time (mean ± σ): 1.808 s ± 0.019 s [User: 1.804 s, System: 0.002 s]

Range (min … max): 1.785 s … 1.847 s 10 runs

Summary

'./rust_fib/target/release/rust_fib' ran

2.65 ± 0.03 times faster than './crystal_fib/bin/crystal_fib'

And here are the versions of each I’m using:

❯ crystal --version

Crystal 1.0.0 [dd40a2442] (2021-03-22)

LLVM: 10.0.0

Default target: x86_64-unknown-linux-gnu

❯ rustc --version

rustc 1.51.0 (2fd73fabe 2021-03-23)

So they both should be the most recent, checking, yeah they are both the most recent.

I wonder why the rust code is so much faster than the crystal code… Let’s dump the rust assembly:

❯ RUSTFLAGS="--emit asm -C llvm-args=-x86-asm-syntax=intel" cargo build --release

Compiling testing v0.1.0 (/home/overminddl1/rust/tmp/rust_fib)

Finished release [optimized] target(s) in 0.30s

❯ bat ./target/release/deps/rust_fib-fe8442559c3857a8.s

../*snip to the main function*/

144 │ mov edi, 2

145 │ call _ZN8rust_fib3fib17h2021b2594a90a8b6E

146 │ mov r14, rax

147 │ mov edi, 3

148 │ call _ZN8rust_fib3fib17h2021b2594a90a8b6E

149 │ mov rbx, rax

150 │ add rbx, r14

151 │ mov edi, 4

152 │ call _ZN8rust_fib3fib17h2021b2594a90a8b6E

153 │ mov r14, rax

154 │ add r14, rbx

155 │ mov edi, 5

156 │ call _ZN8rust_fib3fib17h2021b2594a90a8b6E

157 │ mov rbx, rax

158 │ add rbx, r14

.../*snip this is repetitive, the whole loop was unrolled, let's look at the `fib` function then:*/

91 │ _ZN8rust_fib3fib17h2021b2594a90a8b6E:

92 │ .cfi_startproc

93 │ push r14

94 │ .cfi_def_cfa_offset 16

95 │ push rbx

96 │ .cfi_def_cfa_offset 24

97 │ push rax

98 │ .cfi_def_cfa_offset 32

99 │ .cfi_offset rbx, -24

100 │ .cfi_offset r14, -16

101 │ mov r14d, 1

102 │ cmp rdi, 2

103 │ jb .LBB5_4

104 │ mov rbx, rdi

105 │ xor r14d, r14d

106 │ .p2align 4, 0x90

107 │ .LBB5_2:

108 │ lea rdi, [rbx - 1]

109 │ call _ZN8rust_fib3fib17h2021b2594a90a8b6E

110 │ add rbx, -2

111 │ add r14, rax

112 │ cmp rbx, 1

113 │ ja .LBB5_2

114 │ add r14, 1

115 │ .LBB5_4:

116 │ mov rax, r14

117 │ add rsp, 8

118 │ .cfi_def_cfa_offset 24

119 │ pop rbx

120 │ .cfi_def_cfa_offset 16

121 │ pop r14

122 │ .cfi_def_cfa_offset 8

123 │ ret

124 │ .Lfunc_end5:

125 │ .size _ZN8rust_fib3fib17h2021b2594a90a8b6E, .Lfunc_end5-_ZN8rust_fib3fib17h2021b2594a90a8b6E

126 │ .cfi_endproc

So that looks decently optimized though I’m fairly sure I could do it shorter, but this might be faster, eh… Let’s see… does the crystal compiler have a way to output the assembly? Hmm… Although crystal is using LLVM as its optimizer like Rust is too so I’d be surprised if their code wasn’t identical output, but their significant time difference states otherwise… Ah yes! It has the same --emit that rust does, gotta love clang standards:

❯ crystal build --release --emit asm crystal_fib.cr

❯ bat ./crystal_fib.s

/*snip to the main*/

/*Wow there is a *LOT* of extra code here... the crystal executable is 3.2megabytes

unstripped and rust is 785kb unstripped, holy that's a huge difference... rust is

287kb stripped and crystal's is 396kb stripped, still an oddly big difference... */

/* Oh, I see GC stuff in here... right... Finally found main:*/

999 │ .Ltmp147:

1000 │ .loc 7 9 12

1001 │ movl $1, %edi

1002 │ callq "*fib<Int32>:Int32"

1003 │ movl %eax, %ebp

1004 │ movl $2, %edi

1005 │ callq "*fib<Int32>:Int32"

1006 │ movl %eax, %ebx

1007 │ addl %ebp, %ebx

1008 │ jo .LBB0_139

1009 │ movl $3, %edi

1010 │ callq "*fib<Int32>:Int32"

1011 │ addl %eax, %ebx

1012 │ jo .LBB0_139

/*snip more, same kind of stuff although I wonder what's up with the `jo` instruction,

it overall looks more inefficient, how weird... let's look at `fib` since it will

dominate the time anyway, found it:*/

76386 │ .type "*fib<Int32>:Int32",@function

76387 │ "*fib<Int32>:Int32":

76388 │ .Lfunc_begin239:

76389 │ .loc 7 1 0

76390 │ .cfi_startproc

76391 │ pushq %rbp

76392 │ .cfi_def_cfa_offset 16

76393 │ pushq %rbx

76394 │ .cfi_def_cfa_offset 24

76395 │ pushq %rax

76396 │ .cfi_def_cfa_offset 32

76397 │ .cfi_offset %rbx, -24

76398 │ .cfi_offset %rbp, -16

76399 │ movl %edi, %ebx

76400 │ .Ltmp10165:

76401 │ .loc 7 2 5 prologue_end

76402 │ cmpl $1, %edi

76403 │ jg .LBB239_2

76404 │ movl %ebx, %eax

76405 │ jmp .LBB239_4

76406 │ .LBB239_2:

76407 │ .loc 7 0 5 is_stmt 0

76408 │ movb $1, %al

76409 │ .loc 7 2 5

76410 │ testb %al, %al

76411 │ je .LBB239_5

76412 │ leal -1(%rbx), %edi

76413 │ .loc 7 3 5 is_stmt 1

76414 │ callq "*fib<Int32>:Int32"

76415 │ movl %eax, %ebp

76416 │ addl $-2, %ebx

76417 │ .loc 7 3 18 is_stmt 0

76418 │ movl %ebx, %edi

76419 │ callq "*fib<Int32>:Int32"

76420 │ addl %ebp, %eax

76421 │ jo .LBB239_5

76422 │ .LBB239_4:

76423 │ .loc 7 0 0

76424 │ addq $8, %rsp

76425 │ .cfi_def_cfa_offset 24

76426 │ popq %rbx

76427 │ .cfi_def_cfa_offset 16

76428 │ popq %rbp

76429 │ .cfi_def_cfa_offset 8

76430 │ retq

76431 │ .LBB239_5:

76432 │ .cfi_def_cfa_offset 32

76433 │ callq __crystal_raise_overflow@PLT

76434 │ .Ltmp10166:

76435 │ .Lfunc_end239:

76436 │ .size "*fib<Int32>:Int32", .Lfunc_end239-"*fib<Int32>:Int32"

76437 │ .cfi_endproc

Hmm, so yeah crystal isn’t optimizing it anywhere near as well as the rust code, there’s a lot more instructions to do the same work, that would easily add up to the snowball effect of slowdown that it gets over rust…

I wonder… It looks like the crystal code is using 32-bit calls in the machine code, that’s exceptionally weird… The integer size wasn’t defined in the source (why?!?) so let’s define it, changing the crystal source to be this, actually why doesn’t it have an integer size of just “using the platform word size”, oh well, using 64 bit then to match the rust code:

def fib(n : UInt64)

return n if n <= 1_u64

fib(n - 1_u64) + fib(n - 2_64)

end

sum = 0_u64

(1_u64..42_u64).each do |i|

sum += fib(i)

end

puts sum

I’m still fascinating at how long crystal takes to compile, it’s so much longer than rust from a clean compile, very odd… But running it:

❯ shards build --release

Dependencies are satisfied

Building: crystal_fib

❯ time ./bin/crystal_fib

Unhandled exception: Arithmetic overflow (OverflowError)

from src/crystal_fib.cr:3:20 in 'fib'

from src/crystal_fib.cr:9:12 in '__crystal_main'

from /home/overminddl1/.asdf/installs/crystal/1.0.0/share/crystal/src/crystal/main.cr:110:5 in 'main'

from __libc_start_main

from _start

from ???

./bin/crystal_fib 0.01s user 0.01s system 125% cpu 0.014 total

Well, this is interesting, lol. I’m very sure I put u64 on everything, and u64 definitely holds the value… It happens pretty immediately too, even more weird… Wait, it says it’s crashing on the + between the two fib calls, wha? How? Wha? Is the plus trying a 32 bit add on 64 bit values?!?

Wait, I put 2_64, and that compiled and ran and all… fixing that to 2_u64, so the code is now this:

def fib(n : UInt64) : UInt64

return n if n <= 1_u64

fib(n - 1_u64) + fib(n - 2_u64)

end

sum = 0_u64

(1_u64..42_u64).each do |i|

sum += fib(i)

end

puts sum

Now it runs:

❯ shards build --release

Dependencies are satisfied

Building: crystal_fib

❯ time ./bin/crystal_fib

701408732

./bin/crystal_fib 4.53s user 0.01s system 100% cpu 4.541 total

Hmm, still not any faster… To the assembly!

❯ crystal build --release --emit asm crystal_fib.cr

❯ bat ./crystal_fib.s

/* jumping to the fib */

76364 │ .type "*fib<UInt64>:UInt64",@function

76365 │ "*fib<UInt64>:UInt64":

76366 │ .Lfunc_begin239:

76367 │ .loc 7 1 0

76368 │ .cfi_startproc

76369 │ pushq %r14

76370 │ .cfi_def_cfa_offset 16

76371 │ pushq %rbx

76372 │ .cfi_def_cfa_offset 24

76373 │ pushq %rax

76374 │ .cfi_def_cfa_offset 32

76375 │ .cfi_offset %rbx, -24

76376 │ .cfi_offset %r14, -16

76377 │ movq %rdi, %rbx

76378 │ .Ltmp10164:

76379 │ .loc 7 2 5 prologue_end

76380 │ cmpq $1, %rdi

76381 │ ja .LBB239_2

76382 │ movq %rbx, %rax

76383 │ jmp .LBB239_4

76384 │ .LBB239_2:

76385 │ .loc 7 0 5 is_stmt 0

76386 │ movb $1, %al

76387 │ .loc 7 2 5

76388 │ testb %al, %al

76389 │ je .LBB239_5

76390 │ leaq -1(%rbx), %rdi

76391 │ .loc 7 3 5 is_stmt 1

76392 │ callq "*fib<UInt64>:UInt64"

76393 │ movq %rax, %r14

76394 │ addq $-2, %rbx

76395 │ .loc 7 3 22 is_stmt 0

76396 │ movq %rbx, %rdi

76397 │ callq "*fib<UInt64>:UInt64"

76398 │ addq %r14, %rax

76399 │ jb .LBB239_5

76400 │ .LBB239_4:

76401 │ .loc 7 0 0

76402 │ addq $8, %rsp

76403 │ .cfi_def_cfa_offset 24

76404 │ popq %rbx

76405 │ .cfi_def_cfa_offset 16

76406 │ popq %r14

76407 │ .cfi_def_cfa_offset 8

76408 │ retq

76409 │ .LBB239_5:

76410 │ .cfi_def_cfa_offset 32

76411 │ callq __crystal_raise_overflow@PLT

76412 │ .Ltmp10165:

76413 │ .Lfunc_end239:

76414 │ .size "*fib<UInt64>:UInt64", .Lfunc_end239-"*fib<UInt64>:UInt64"

76415 │ .cfi_endproc

Well it’s definitely 64-bit now, but it’s still using extra instructions and extra branches…

It looks like it’s mainly slower because it’s testing for overflow and jumping to an exception throw if it happens… That’s incredibly poor on such numerical sensitive code, I wonder if there’s a way to turn that off (rust handles this a lot better…), doesn’t seem so from some googling… Let’s change the rust integer operation from wrapping to checked to see if it slows down by the same amount, so the code is now:

fn fib(n: usize) -> usize {

match n {

0 | 1 => 1,

n => fib(n.checked_sub(1).unwrap()).checked_add(fib(n.checked_sub(2).unwrap())).unwrap(),

}

}

fn main() {

let sum: usize = (0..42usize).map(fib).sum();

println!("{}", sum);

}

So that should be doing the same thing as crystal is now, first let’s compile (using nightly because checked_* is nightly right now) and run and time it:

❯ cargo +nightly run --release

Compiling rust_fib v0.1.0 (/home/overminddl1/rust/tmp/rust_fib)

Finished release [optimized] target(s) in 0.30s

Running `target/release/rust_fib`

701408732

❯ time ./target/release/testing

701408732

./target/release/testing 1.80s user 0.00s system 99% cpu 1.797 total

No time change, let’s see if the assembly changed:

/*jumping to fib*/

88 │ .section .text._ZN8rust_fib3fib17ha1136bd30e432779E,"ax",@progbits

89 │ .p2align 4, 0x90

90 │ .type _ZN8rust_fib3fib17ha1136bd30e432779E,@function

91 │ _ZN8rust_fib3fib17ha1136bd30e432779E:

92 │ .cfi_startproc

93 │ push r14

94 │ .cfi_def_cfa_offset 16

95 │ push rbx

96 │ .cfi_def_cfa_offset 24

97 │ sub rsp, 8

98 │ .cfi_def_cfa_offset 32

99 │ .cfi_offset rbx, -24

100 │ .cfi_offset r14, -16

101 │ mov eax, 1

102 │ cmp rdi, 2

103 │ jb .LBB5_3

104 │ mov rbx, rdi

105 │ add rdi, -1

106 │ call _ZN8rust_fib3fib17ha1136bd30e432779E

107 │ mov r14, rax

108 │ add rbx, -2

109 │ mov rdi, rbx

110 │ call _ZN8rust_fib3fib17ha1136bd30e432779E

111 │ add rax, r14

112 │ jb .LBB5_2

113 │ .LBB5_3:

114 │ add rsp, 8

115 │ .cfi_def_cfa_offset 24

116 │ pop rbx

117 │ .cfi_def_cfa_offset 16

118 │ pop r14

119 │ .cfi_def_cfa_offset 8

120 │ ret

121 │ .LBB5_2:

122 │ .cfi_def_cfa_offset 32

123 │ lea rdi, [rip + .L__unnamed_2]

124 │ lea rdx, [rip + .L__unnamed_3]

125 │ mov esi, 43

126 │ call qword ptr [rip + _ZN4core9panicking5panic17h3de4db67bd397eb3E@GOTPCREL]

127 │ ud2

128 │ .Lfunc_end5:

129 │ .size _ZN8rust_fib3fib17ha1136bd30e432779E, .Lfunc_end5-_ZN8rust_fib3fib17ha1136bd30e432779E

130 │ .cfi_endproc

So the exception does get thrown in a panic at label .LBB5_2, but there was only one place that rust wasn’t able to prove it can’t happen, the checked_add (the two checked_sub’s it was able to prove it can never happen, because the 0 | 1 case above proves it) so it tests it there, but even then the code is still overall more efficient than the crystal code, very interesting…

So for numerical work crystal isn’t there quite yet compared to other languages. ^.^;

EDIT 1:

And I’m curious about those compiling times, people say rust compiles slow but crystal seems a lot slower, let’s actually test!

❯ # First let's wipe out all of rusts artifacts:

❯ rm -r target

❯ # Then a fresh compile

❯ time cargo build --release

Compiling rust_fib v0.1.0 (/home/overminddl1/rust/tmp/rust_fib)

Finished release [optimized] target(s) in 0.68s

cargo build --release 0.78s user 0.11s system 113% cpu 0.780 total

❯ # Then an incremental no-change compile

❯ time cargo build --release

Finished release [optimized] target(s) in 0.00s

cargo build --release 0.09s user 0.01s system 99% cpu 0.097 total

And now crystal’s compiles:

❯ # First let's wipe out all of crystals artifacts:

❯ rm -r bin

❯ # Then a fresh compile

❯ time shards build --release

Dependencies are satisfied

Building: crystal_fib

shards build --release 1.57s user 0.21s system 156% cpu 1.140 total

❯ # Then an incremental no-change compile

❯ time shards build --release

Dependencies are satisfied

Building: crystal_fib

shards build --release 1.58s user 0.22s system 153% cpu 1.169 total

That ‘felt’ a lot faster of compiling than it did before… does crystal store its artifacts outside of the project directory somewhere? Still much slower than Rusts compiles though, again how very odd especially since they both use LLVM as an optimizer, guess the code quality that is sent to LLVM is very different…

EDIT 2:

I changed the crystal code to remove the types, so it is what was in the original post about this, and now the compile times have changed to what I remember it being:

❯ rm -r bin

❯ time shards build --release

Dependencies are satisfied

Building: crystal_fib

shards build --release 13.10s user 0.20s system 104% cpu 12.773 total

❯ time shards build --release

Dependencies are satisfied

Building: crystal_fib

shards build --release 1.50s user 0.22s system 147% cpu 1.168 total

So it looks like its type inference is VERY slow or something, very weird…

EDIT 3:

Did it with elixir as well, the code:

defmodule Fib do

def fib(0), do: 1

def fib(1), do: 1

def fib(n) do

fib(n-1) + fib(n-2)

end

def run(-1, sum), do: sum

def run(n, sum), do: run(n-1, sum + fib(n))

def run() do

sum = run(41, 0)

IO.puts(sum)

end

end

And running it within the vm:

iex(11)> :timer.tc(Fib, :run, [])

701408732

{7480096, :ok}

So it took about 7 and a half seconds to run, not at all bad for an interpreted language, a LOT better than ruby, lol.

Now even though the BEAM VM is not designed to be shut down, let’s try it by running it straight and shutting it down again as fast as possible, I.E. I made an escript! Running it:

❯ time ./elixir_fib

701408732

./elixir_fib 7.85s user 0.12s system 100% cpu 7.931 total

Not much slower at all, starting up and shutting down the VM (that’s designed not to ever be started up and shut down often, rather it should run all the time) only added about 0.35 seconds to it. ^.^