Hi @andatki! First of all, thank you so much for this amazing book!

I’ve found something that can cause a bit of confusion.

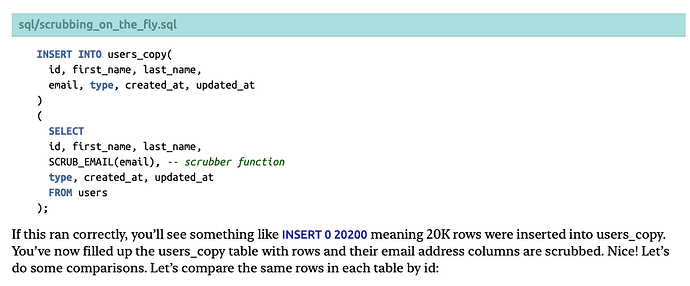

After seeding the data in order and running the bulk_load script, the reader will have about 10M rows in the users table. But on page 56, running the first statement to copy the scrubbed data into the users_copy table fails because of the statement timeout (at least on my computer). The book expects the users table to contain only 20200 records (the ones added with rails data_generators:generate_all), but as I mentioned, there are about 10M.

This shouldn’t be a problem for the majority of readers, but it can cause confusion and breaks the consistency a bit.

Hi @BraisonCrece. Thanks for posting this. The statement_timeout is used to set the max allowed time for operations to run before they’re cancelled.

What we need to do there is raise the statement_timeout to allow for more time. We can do that in several ways: by modifying the user’s persistent value, by modifying it within a psql session, or modifying it only for the scope of a single transaction. If you used the Rideshare scripts to create the owner or app_user users, you could modify them with a statement like this one:

ALTER ROLE owner SET statement_timeout = '60s';

That would raise the statement timeout to 60 seconds on an ongoing basis. You may need to raise it even more. You can also set it back later if you’d like.
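To check the value currently in effect, or to undo the role-level change later, something like the following should work. This is a sketch that assumes the role is named owner, as in the statement above. Note that a role-level setting is picked up by sessions started after the change, so reconnect before checking.

```sql
-- Show the statement timeout in effect for the current session
SHOW statement_timeout;

-- Remove the role-level override so the role falls back to the default
-- (assumes the role is named owner, as above)
ALTER ROLE owner RESET statement_timeout;
```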

You can also raise the statement timeout within a session:

SET statement_timeout = '60s';

Or you can scope the change to an individual transaction using the LOCAL keyword. To do that, use SET LOCAL as follows:

SET LOCAL statement_timeout = '60s';
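SET LOCAL only takes effect inside a transaction block, and the value reverts when the transaction ends. A minimal sketch, with the long-running statement left as a placeholder rather than reproduced from the book:

```sql
BEGIN;
-- Applies only until COMMIT or ROLLBACK
SET LOCAL statement_timeout = '60s';
-- Run the long-running statement here, for example the copy into users_copy
COMMIT;
```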

With the larger table, where operations take longer, try raising the statement timeout and running the operation again, then let me know if that fixes it for you.

Thanks!


Thank you so much for your response. I truly appreciate the time you’ve taken to explain how to adjust the statement_timeout to allow more time for operations.

What I was trying to show is that the book expects the users table to have around 20k records at this point, but in reality, after following the previous setup steps, it has 10M records.

I ran the command with a LIMIT 20200. But this discrepancy, in my opinion, can be confusing.

In any case, this is a very minor issue, but I wanted to report it.

I’m enjoying the book a lot! A big hug to you.

Hi @BraisonCrece I see. Are you able to say which Beta version you have (or are you updated to the latest?), and which page you’re referring to (Update: I see you said page 56 in the original post) in that screenshot?

We might be able to add some text in like “make sure to reset to get back to 20K rows in rideshare.users if you’ve loaded more”. The 10 million rows from bulk loading is useful elsewhere, but yes ideally we want consistency for the counts that are displayed and the text. Thanks.

I would say I have the latest version, I downloaded the copy last week.

I cannot confirm which page it is because, depending on the width of the reader (Apple Books), the page number is different.

What I can confirm is that this can be found in the block titled “Understanding Clone and Replace Trade-Offs”.


Thanks for reporting this. We’ve added a message to that section that hopefully helps future readers. The message explains that we expect there to be around 20K rows in the users table, and if there are significantly more, the reader should reset (reset instructions are elsewhere).

Hope you’re enjoying the book!
