Glad you are enjoying the book! I do recommend looking at the Github source for the A* implementation; it includes a couple of fixes (that will be live in the crate very soon) and performs quite a bit better. Search for “red blob games” on the topic; the author gave me a lot of help with my path-finding code!

In answer to your questions:

-

The in_bounds trait is used by several of bracket-lib’s functions, in particular field of view. When the FOV code casts its light paths, it uses in_bounds to check that it isn’t trying to test a tile that is outside of the map. It’s not so useful in Dijkstra and A*, because get_available_exits hopefully won’t return invalid tiles - but field of view assumes a traditional grid, and doesn’t maintain an exit list. The trait default isn’t quite the same as the implementation in the book (handling of zero tiles), so it has to be overridden to not show bugs. (The Github FOV code is also improved, and will be going live very soon).

-

The book code doesn’t really use the distance, but it’s good to have. If you add diagonal movement, you want to add in diagonal costs of about 1.4 (otherwise pathing functions will dance diagonally because it looks like the shorter path). Dijkstra doesn’t traditionally use pathing costs, but it can - you can get pretty decent results including things like “this swampy tile type costs more to traverse” and keep the same basic functionality but tend towards avoiding the swampy tiles in generated paths. The book doesn’t get to A* (I thought it would, but that content didn’t make the cut).

-

I went with the simple Euclid heuristic because it’s the easiest to explain (and the chapter is mostly teaching you to consume traits and benefit from library functions; the game is the teaching vehicle there). There’s some example code in the bracket-lib pathing section playing with different heuristics. Manhattan can give some interesting behavior - because it’s only counting horizontal + vertical distance, the paths it picks to navigate obstacles can look a bit unnatural for someone on foot (and look very natural for someone driving a vehicle).

So why retain the square-root in the distance heuristic? When you’re comparing distances, it generally doesn’t matter if you have distance or distance^2 so long as you compare like-with-like. It’s actually something I agonized over a bit, and decided to go with teaching the more “natural” approach. I did a fair amount of testing behind-the-scenes before I came to that conclusion:

- When I got started with game development (on an 8-bit BBC Micro) a single square-root call could mean missing a few frames. Even on a 486DX (math co-processor onboard), the impact was pretty painful. So you either used d^2 or a fast approximation function. When I got started with

bracket-lib, I was really surprised by just how fast square roots are on modern hardware. Even on a core2, a square root call is only 9-69 cycles. On recent i5/i7/i9, the call is typically vectorized and is ridiculously fast (even the non-approximate call is only a little slower than a division now). On an i3, sqrt benchmarked at 10 million sqrt calls in 870ms. So when I did a bunch of pathing benchmarks, I found that eliding the sqrt really didn’t change much in terms of performance!

- (Aside: An interesting side-effect of the speed of modern floating point ops is that a vector line using floats can actually outperform integer Bresenham on some systems now!)

- My page count is a little tight, so I didn’t want to expend the extra ink explaining a relatively minor optimization.

- You can get some funky results using distance^2 on pathing functions that total up cost and also compare to distance-to-exit (A*, I’m looking at you!). When you are listing exits with d^2, the natural cost to move in a cardinal direction is 1^2 (1*1) - or 1. The d^2 for moving a single tile is also 1. But when you are 4 tiles from your exit, the cumulative “add distance^2 from exit” is 4 - but d^2 is actually 4^2 = 16. So the algorithm goes somewhat haywire, because you aren’t comparing like with like anymore.

So why check that the closed list entry has a lower total cost than the current step? Its partly there because I copied it from the algorithm description - but it can be useful. Remember that A* traverses any graph, not just a tile map - and it can run into tiles that teleport you somewhere else on the map, or graph nodes that don’t provide sensible linear movement (you can build one-way nodes if you want!). So it is possible to come up with a map that requires some back-tracking. 99.9% of the time, that check isn’t needed.

Likewise, it’s adding the f cost because that was in the original algorithm description I implemented. Most of the time, g would work - but going with the total cost incurred so far discourages back-tracking. If you are just considering the one-hop cost of the parent, you have basically fallen back to a breadth-first search and A* won’t be so pedantic about following what appears to be the shortest total path.

Some examples to try to illustrate this:

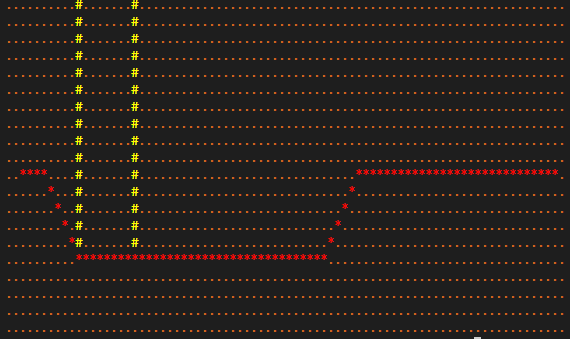

Here’s an A-star traversal using regular Pythagoras:

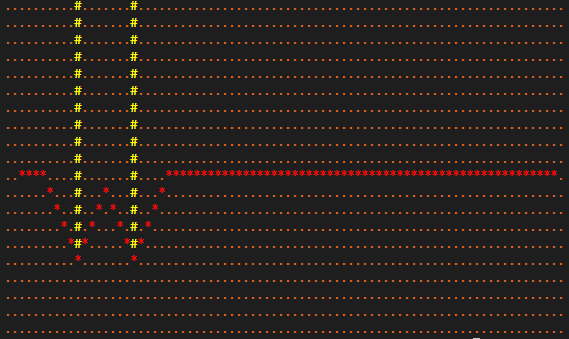

Here’s an A-star traversal using Pythagoras squared:

The difference between “one hop^2” and “total distance^2” becomes a problem and you get a sub-optimal path.

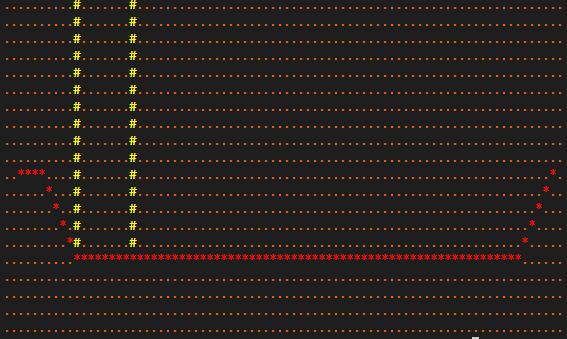

Here’s A-star in Manhattan mode:

In this case, the heuristic favors trying to shorten the vertical distance, so it looks like a better option to take the odd route.

Benchmarking

The benchmark built into bracket-pathfinding randomly generates an 80x20 tile map and uses A* to move across it. Execution times (over 10k iterations with a 3s warmup, on 4-year old core i7):

Pythagoras: 812.80 us (best), 818.56 us (average), 824.65 us (worst)

Pythagoras Squared: 758.92 us (best), 764.96 us (average), 771.61 us (worst)

Manhattan: 823.16 us (best), 827.57 us (average), 832.59 us (worst)

Edit: These times include randomly generating the map!

So in the grand scheme of things, there’s a tiny improvement for not running the square roots on distance: 53.6 us. Overall, that’s not really enough to notice a difference unless you are doing a lot of pathfinding per frame. You have 16,700 us to play with in a frame at 60 FPS, so unless you are recalculating a lot of paths on every tick you really don’t need to worry too much.

Some optimizations tips for the times you do care about 818us

From working on various games over the years, I’ll throw in a little optimization advice on pathfinding:

- You generally don’t need to run it on every frame. If an entity needs to path to a static goal (for example, a dwarf finding his pick in Dwarf Fortress) you can run the A* once and store the path. Then each time the dwarf moves - check to see if the next step on the path is ok. If it is, keep following the path. If it isn’t, try to make a new one.

- Kyzrati (of Cogmind fame) is very fond of an optimization that runs a simple line-of-sight test before calling the full A* system. If LoS exists, there’s your path!

- Look into the “jump point” optimization for A*. It’s hard to implement in the most general sense (which is why its not in bracket-lib), but for some types of game it can make a huge difference. You basically store a list of rooms (great for maps that have them!), and each room stores a path to other rooms. So when you want to generate a path to an entity, you path to the nearest room (or just use the room ID if you are in it) and then path to the destination room. You can see this in action in RimWorld; the settlers sometimes take a slightly odd route to get to a room from which they can path to the destination room. (There’s a YouTube video about how it works, somewhere). It’s not always an optimal route, but it’s fast!

Hope that helped!